Database

created: 18 January 2021

modified: 03 March 2022

revision: 3

Normalization

- Normalization requires queries to be based on the index (in order to be fast).

- Requiring existence of “the key” ensures that the table is in 1NF;

- Requiring that non-key attributes be dependent on “the whole key” ensures 2NF;

- Requiring that non-key attributes be dependent on “nothing but the key” ensures 3NF.

- While this phrase is a useful mnemonic, the fact that it only mentions a single key means it defines some necessary but not sufficient conditions to satisfy the 2nd and 3rd Normal Forms. Both 2NF and 3NF are concerned equally with all candidate keys of a table and not just any one key.

- Not good for reportings => either denormalized or Views

- Denormalization - the process of improving read performance, at the expence of writing, by adding redundant copies.

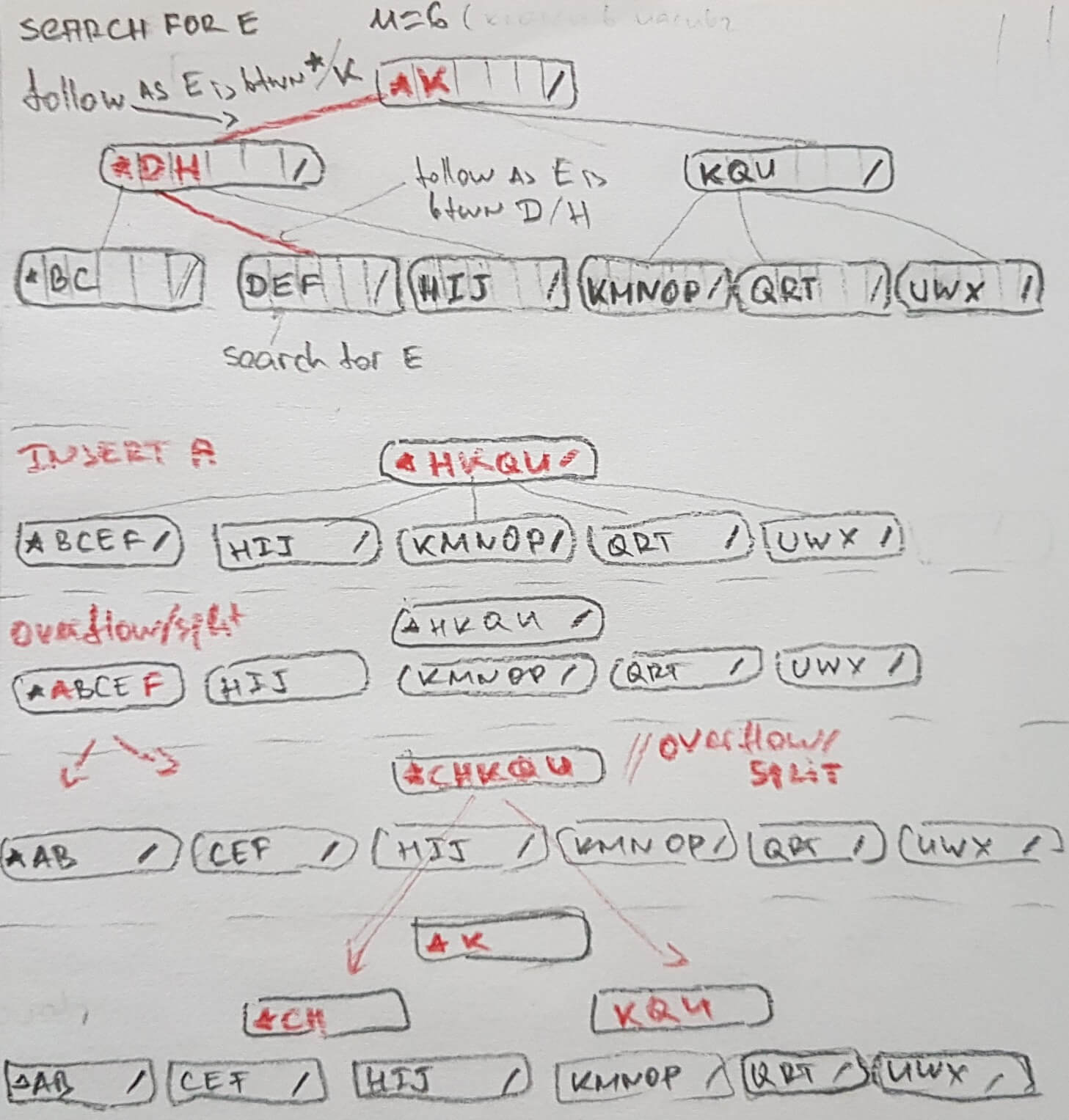

B/B+ Tree

- B tree has key-value nodes

- B+ tree has key nodes as roots and internals, and values are contained in leaf(external) nodes only. Therefore internal nodes keys, are repeated in leafs too.

- can use binary search on keys in current node.

- example implementations at Sedgewick

- (Sedgewick, 2011)

Bloom Filter

- (Talbot, 2015)

- Hashing filter, can have false positive, but not true negatives.

- Contains a bit array and several hashing functions each of which maps an element to an element in the array, generating random uniform distribution.

- No removal from the filer, as this can generate false negatives.

- Example: Bloom filter with 10 buckets and 3 has hash functions, that will hold home pets of John.

- put Cat: the 3 hash functions return 3,4,10 =>

[-,-,3,4,-,-,-,-,-,10] - put Dog: the 3 hash functions return 5,2,1 =>

[1,2,3,4,5,-,-,-,-,10] - has Cat? hashes are 3,4,10 => YES

- has Dog? hashes are 5,2,1 => YES

- has Elephant? hashes are 10,6,7 => NO as buckets 6 and 7 are empty

- has Monkey? hashes are 10,2,1 => YES, but this is

FALSE POSITIVE

- put Cat: the 3 hash functions return 3,4,10 =>

FALSE POSITIVErate can be controlled with the number of buckets (e.g. filter size)

LSM - Log Structured Merge Tree

- (Petrov, 2019)

- keeping a cascade of SSTables that are merged in the background

- Similar to B-tree, but optimized for sequential disk access + full occupancy

- (SSTables) Sorted String Tables - persistent ordered immutable map. (TODO: check if mapping with The HBase files is 1:1)

- Index Block - a primary key and an offset pointing to the offset in data block, where record can be found. Implement with structure optimized for quick search like B-tree, SkipList etc.

- Data Block

- Since SSTable is immutable, insert, update or delete operations would require rewriting the whole le, since it’s optimized for reads, written sequentially and has no reserved empty space that would allow any in-place modifications. That’s where the LSM Trees come into play.

- every data item has a timestamp associated with it.

- In LSM Trees, all the writes are performed against the mutable in-memory data structure - the SSTable in for og B-Tree or SkipList or else.

- no competition between reads and writes on files.

- writes: on threshold condition (time or size) data is written to disk (flushed) in the form of SSTable.

- reads: data from all SSTables on disk and the one in the memory requires merge to reconcile data.

- compaction the process of merging, removing redundant and deleted records and so on.

- Takes several SSTable files and merges them together

- shadowing key marked for deletion are not returned, of 2 records with same key the one with bigger timestamp is used returned

- Precaution : LSM-Trees might result into worse latency, since both CPU and IO bandwidth is spent re-reading and merging tables instead of just serving reads and writes. It’s also possible, under a write-heavy workload, to saturate IO by just writes and flushes, stalling the compaction process. Lagging compaction results into slower reads, increasing CPU and IO pressure, making the matters worse. This is something to watch out for.

R-Tree - for multidimensional indexes

DB Data structures in general

- (Petrov, 2019)

- Keeping the data structure immutable favors the sequential writes: data is written on disk in a single pass, append-only.

- Also data can be read from the disk without any segment locking between operations, which significantly simplifies concurrent access.

- In contrast, mutable data structures employ hierarchical locks and latches in order to ensure on disk data structure integrity, allow multiple readers at the same time but give exclusive ownership for parts of tree to writers.

- However, since amount of allocated files constantly grows, immutable data structures have to merge and rewrite files in order to make sure that the least amount of files is hit during the query, as the requested record might be spread across multiple files.

- On the other hand, mutable files may have to be rewritten partially or completely to decrease fragmentation, merge overflow areas and reclaim space occupied by updated or deleted records (as their new contents were written elsewhere).

HBase

Basic Units

- Column, referred as

family:qaulifier- can have many versions

- each value is contained in a cell + timestamp

- Column Families, made of 1+ columns. Keep N of families low, in order of tens, do not change them often.

- Row, made of 1+ Columns, referred with unique row_key (byte array)

- row_key is primary index

- access to row is atomic

- Table, made of 1+ rows sorted by lexicographically by row_key

- Namespace, made of 1+ tables

- Example: Java-Like structure

// (Table, RowKey, Family, Column, TimeStamp) = Value

SortedMap<RowKey, List<SortedMap<Column, List<Value, Timestamp>>>>

- Example: Table-like structure

| Row_Key | TimeStamp | Column Data | Column Meta Type | Column Meta Size | Column Counter/Updates |

|---|---|---|---|---|---|

| “ro1” | time 3 | some json | 2323 | 1 | |

| time 6 | new json | 2 | |||

| time 8 | “app/json” | ||||

| time 9 | new json2 | 3 |

Scalability

- Region, basic unit of scalability and load-balancing

- made of rows sorted together

- equivalent to range partition in DB sharding

- Minor/Major Compaction : Merge of HFiles on the disk

- rewrites all files within a column family

- can drop deleted entires

- region-server -> 1+ region

- requires master server

conf/hbase.xmloverrideshbase-common/src/main/resource/hbase-default.xml.- A lot of documentation in the latter place!

- Strong Consistency

API

- WAL: Write-ahead log

- pp. 114 examples

- chapter 3 for CRUD operations

Others

- (Braginsky et al., n.d.) Accordion: Uses

CompactionMemStorethat performs in-memory compaction (withCellArrayMap), instead of the default MemStore (withConcurrentSkipListMap). - (Dimiduk, 2014) HBase maintains 2 cache structures:

MemStoreandBlockCacheMemStore(SkipList structure) accumulates data edits as they’re received, buffering them in memory. Useful for accessing recent edits.BlockCachekeeps data blocks resident in memory after they’re read. Useful for serving random access data.

- (Dimiduk, 2014) HBase BlockCache.

- The smallest I/O component that HBase can read from HFile

- 4 varieties: DATA (user data), META(info about the HFile itself), INDEX(speed up read, with index over cells in DATA blocks), BLOOM(speed up read, with Bloom filter)

- Single

BlockCacheinstance per region server. - as per 2014 3 implementations:

LruBlockCache(default, in java heap only),SlabCache(again LRU algorithm, but with off-heap cache - DirectByteBuffers),BucketCache(3 modes, heap, off-heap, file) SlabCacheandBucketCacheare used for multi-level caching. For example withLruBlockCacheon L1 and another on L2, or use L1 for Bloom and Index.. see reference for more info.

Cache

- (“Cache Replacement Policies,” n.d.) Cache replacement policies - see reference

References

- Cache replacement policies. In Wikipedia. Retrieved January 21, 2021, from https://en.wikipedia.org/w/index.php?title=Cache_replacement_policies

- Braginsky, A., Hillel, E., & Bortnikov, E. Accordion: HBase Breathes with In-Memory Compaction. https://blogs.apache.org/hbase/entry/accordion-hbase-breathes-with-in

- Dimiduk, N. (2014). HBase BlockCache 101. In Cloudera Blog. https://blog.cloudera.com/hbase-blockcache-101/

- George, L. (2011). HBase : the definitive guide. O’Reilly.

- Petrov, A. (2019). Database internals. O’Reilly Media.

- Sedgewick, R. (2011). Algorithms. Addison-Wesley.

- Talbot, J. (2015). What are Bloom filters? In Medium. https://blog.medium.com/what-are-bloom-filters-1ec2a50c68ff